Thursday 10 April 2025

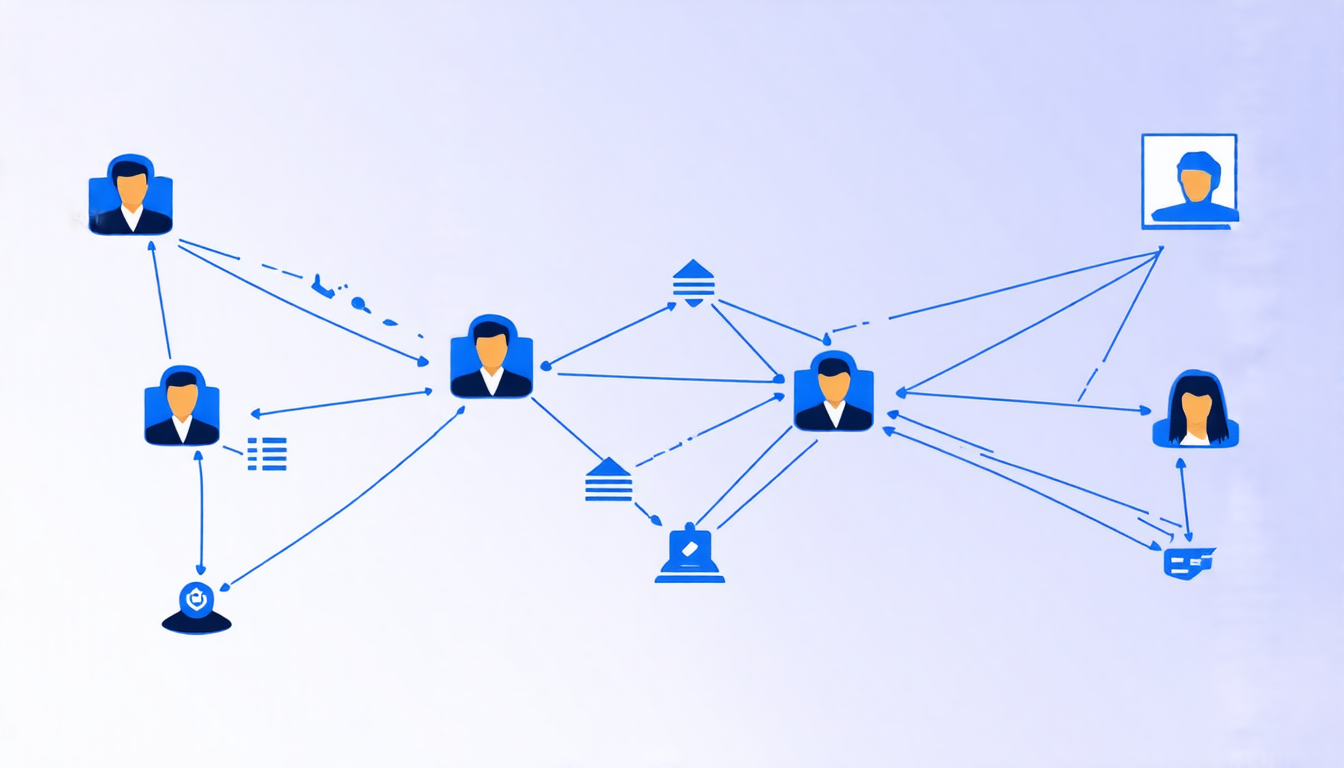

Computer scientists have long searched for better matching algorithms, and dynamic matching networks are one of the most active areas of that search. The problem is simple to state: given a set of agents and their preferences, how do you match them in a way that maximizes overall satisfaction? Solving it turns out to be considerably harder.

The traditional approach has been static planning: you plan ahead and fix the matches based on the initial state of the system. This approach has clear limitations. What if an agent's preferences change over time? What if new agents enter the system?

To address these limitations, researchers have turned to dynamic matching networks. In this setting, agents arrive stochastically over time, and the goal is to match them in a way that achieves constant regret at all times. Regret, in this context, is the difference between the maximum possible utility and the utility actually achieved by the matches. Constant regret means that this gap stays below a fixed bound no matter how long the system runs, rather than growing with time.
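The regret described above can be written compactly. The notation here is an assumption made for illustration and is not taken from the study: $V^{*}(t)$ stands for the maximum utility attainable by time $t$, $V^{\pi}(t)$ for the utility collected by a policy $\pi$ by time $t$, and $C$ for a constant that does not depend on $t$.

$$
\mathrm{Regret}^{\pi}(t) = V^{*}(t) - V^{\pi}(t), \qquad \mathrm{Regret}^{\pi}(t) \le C \quad \text{for all } t \ge 0 .
$$

By contrast, a policy whose regret grows with $t$ falls further and further behind the best achievable outcome the longer it runs.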

A recent study has made significant progress in this area. The authors propose a state-independent greedy policy that achieves constant regret for dynamic matching networks with finitely many agent types. This policy is particularly appealing because it requires less information than traditional state-dependent policies, making it more suitable for real-world applications where data may be limited.

The key insight behind the policy is that it decouples the matching decision from the detailed state of the system. Because it needs less information than a state-dependent policy, the algorithm can pursue overall utility without tracking every transient fluctuation in the system. The analysis relies on Lyapunov functions, which provide a mathematical framework for studying the stability and performance of the algorithm.
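To make the idea of a state-independent greedy rule more concrete, here is a minimal simulation sketch in Python. It is not the authors' algorithm. It assumes a toy model in which exactly one agent arrives per period, matches pair two distinct agent types, and the policy scans a fixed priority list and performs the first match whose two types are both available. The function name `simulate_greedy`, the types `A`, `B`, `C`, and the rewards and arrival probabilities are all hypothetical.

```python
import random
from collections import Counter


def simulate_greedy(arrival_probs, matches, horizon, seed=0):
    """Simulate a toy state-independent greedy matching rule.

    arrival_probs: dict mapping agent type -> arrival probability (assumed model)
    matches: list of (type_a, type_b, reward) in fixed priority order,
             with type_a != type_b (assumption of this sketch)
    horizon: number of periods; one agent arrives per period
    Returns (total_reward, number_of_matches, waiting_agents_by_type).
    """
    rng = random.Random(seed)
    types = list(arrival_probs)
    weights = [arrival_probs[t] for t in types]

    queues = Counter()  # agents currently waiting, by type
    total_reward = 0.0
    n_matches = 0

    for _ in range(horizon):
        arrived = rng.choices(types, weights=weights)[0]
        queues[arrived] += 1

        # The rule looks only at whether each type is available (queue > 0),
        # never at how long the queues are.
        for a, b, reward in matches:
            if queues[a] > 0 and queues[b] > 0:
                queues[a] -= 1
                queues[b] -= 1
                total_reward += reward
                n_matches += 1
                # With one arrival per period and no feasible match left over
                # from the previous period, at most one match is possible here.
                break

    return total_reward, n_matches, queues


# Hypothetical three-type network: B can be matched with A (reward 3) or C (reward 1).
probs = {"A": 0.3, "B": 0.5, "C": 0.2}
priority = [("A", "B", 3.0), ("B", "C", 1.0)]

reward, n_matches, waiting = simulate_greedy(probs, priority, horizon=1000, seed=1)
print(reward, n_matches, dict(waiting))
```

The sketch only reports the reward collected and the agents left waiting. Measuring regret would additionally require the best achievable reward for the same arrival sequence, which this example does not compute.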

The authors also show that their policy is consistent with traditional static priority policies and longest-queue policies. In other words, if you were to apply these policies in a dynamic setting, they would converge to the proposed state-independent greedy policy as the system stabilizes.

The research has practical implications. In kidney exchange programs, for instance, matching patients with compatible donors can save lives. Other areas, such as online education platforms and job matching services, could also benefit from this kind of algorithm.

Much work remains, but this study is a significant step forward in our understanding of dynamic matching networks. As research in the area continues, matching algorithms may become considerably more precise and efficient.

Cite this article: “Optimal Matching Policies in Stochastic Queueing Systems: A Regret Analysis”, The Science Archive, 2025.

Dynamic Matching Networks, Matchmaking Algorithm, Computer Science, Static Planning, Dynamic Systems, Stochastic Arrivals, Constant Regret, Lyapunov Functions, State-Independent Greedy Policy, Optimization Problem