Saturday 19 April 2025

Building control systems that are both efficient and effective has long been a challenge in robotics, automation, and artificial intelligence. Recently, researchers have made significant progress with methods that combine model predictive control (MPC) with reinforcement learning (RL). The aim of this fusion is a more robust and adaptable controller that can learn from its environment and adjust to changing conditions.

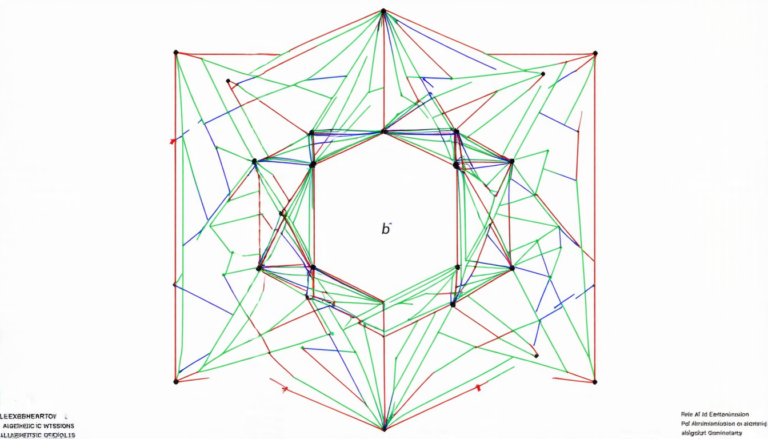

One such approach is Policy-Enhanced Partial Tightening (PEPT), which embeds a learned RL policy inside an MPC controller. MPC contributes explicit use of a system model and its constraints, while the RL policy contributes behavior learned from experience. Combining the two, PEPT aims to deliver strong control performance at a lower computational cost per control step. This matters most in real-time applications, where each new control action must be computed within a short, fixed time budget.
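For reference, the generic finite-horizon problem that an MPC controller solves at every sampling instant can be written as below. The notation (stage cost $\ell$, dynamics $f$, constraint function $g$, horizon $N$, terminal cost $V_f$) is the standard textbook form rather than notation taken from the PEPT work, and how PEPT's "partial tightening" of the constraints alters this problem is not covered here.

$$
\begin{aligned}
\min_{u_0,\dots,u_{N-1}} \quad & \sum_{k=0}^{N-1} \ell(x_k, u_k) + V_f(x_N) \\
\text{s.t.} \quad & x_{k+1} = f(x_k, u_k), \qquad k = 0,\dots,N-1, \\
& x_0 = x(t), \\
& g(x_k, u_k) \le 0, \qquad k = 0,\dots,N-1.
\end{aligned}
$$

Only the first input $u_0$ is applied to the system; the problem is then re-solved from the next measured state. In the description that follows, the learned RL policy enters through the terminal cost $V_f$ and through how the solver is initialized.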

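The key innovation behind PEPT is its use of the learned RL policy to approximate the terminal cost: the term in the MPC objective that accounts for everything that happens after the end of the prediction horizon. With a good estimate of this cost-to-go, the controller does not need to look far into the future itself; it can optimize over a short horizon and still choose actions with the long-term outcome in mind. Keeping the optimization problem solved at each step small is what lets PEPT remain efficient on larger, more complex nonlinear tasks.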
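To make the idea of a policy-based terminal cost concrete, here is a minimal Python sketch built on loudly stated assumptions: a toy double-integrator model, a quadratic stage cost, a hand-written feedback law (`policy`) standing in for a trained RL policy, and a brute-force search over five discrete input values in place of a real nonlinear solver. The terminal cost is estimated by rolling `policy` forward from the last predicted state and summing stage costs, which is one common way a policy can stand in for the cost-to-go. All names and numbers are hypothetical; this is not the PEPT implementation.

```python
from itertools import product

DT = 0.1  # sampling time [s] (assumed)
U_CANDIDATES = (-2.0, -1.0, 0.0, 1.0, 2.0)  # admissible inputs, |u| <= 2 (assumed)


def dynamics(x, u):
    """One explicit-Euler step of a double integrator, x = (position, velocity)."""
    pos, vel = x
    return (pos + DT * vel, vel + DT * u)


def stage_cost(x, u):
    """Quadratic cost for regulating the state to the origin."""
    pos, vel = x
    return pos**2 + 0.1 * vel**2 + 0.01 * u**2


def policy(x):
    """Hypothetical stand-in for a trained RL policy: a stabilizing PD law."""
    pos, vel = x
    return -2.0 * pos - 1.5 * vel


def terminal_cost(x, rollout_steps=50):
    """Approximate the cost-to-go beyond the horizon by rolling out `policy`.

    For simplicity the rollout ignores the input bound on the candidates.
    """
    total = 0.0
    for _ in range(rollout_steps):
        u = policy(x)
        total += stage_cost(x, u)
        x = dynamics(x, u)
    return total


def mpc_objective(x0, controls):
    """Stage costs over the short horizon plus the policy-based terminal cost."""
    x, total = x0, 0.0
    for u in controls:
        total += stage_cost(x, u)
        x = dynamics(x, u)
    return total + terminal_cost(x)


def solve_mpc(x0, horizon=3):
    """Enumerate every discrete input sequence and return the best first input."""
    best = min(product(U_CANDIDATES, repeat=horizon),
               key=lambda seq: mpc_objective(x0, seq))
    return best[0]


if __name__ == "__main__":
    # Receding-horizon loop: apply only the first input, then re-plan.
    x = (1.0, 0.0)
    for _ in range(20):
        x = dynamics(x, solve_mpc(x))
    print(x)
```

Because `terminal_cost` summarizes the rest of the trajectory, the search only enumerates 5**3 = 125 three-step sequences per control step. A practical controller would swap the brute-force search for a gradient-based nonlinear solver, but the role of the policy in the objective stays the same.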

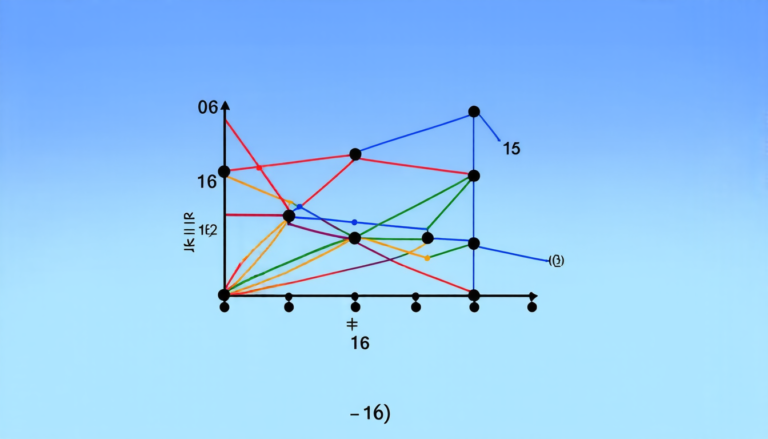

The researchers tested PEPT on a nano-quadcopter tracking task, comparing it with standard MPC approaches. PEPT achieved tracking costs comparable to, or lower than, those of the baselines while keeping constraint violation rates lower. It also experienced fewer solver failures than the standard MPC methods.

A notable aspect of PEPT is its flexibility in how the optimizer is initialized. By choosing different rollout policies for initialization, designers can tune how much the controller relies on the RL policy's predictions. This lets them balance exploration-exploitation trade-offs and tailor the controller’s behavior to a specific application.
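One way to picture "initialization strategies" is as different initial guesses handed to the MPC solver at each step. The sketch below reuses a toy double integrator and a hand-written stand-in `policy` (both hypothetical) and contrasts three illustrative options, ordered from no reliance on the policy to full reliance. The function names and the specific strategies are assumptions for illustration, not the options evaluated for PEPT.

```python
DT = 0.1  # sampling time [s] (assumed)


def dynamics(x, u):
    """One explicit-Euler step of a double integrator, x = (position, velocity)."""
    pos, vel = x
    return (pos + DT * vel, vel + DT * u)


def policy(x):
    """Hypothetical stand-in for a trained RL policy: a stabilizing PD law."""
    pos, vel = x
    return -2.0 * pos - 1.5 * vel


def simulate(x0, controls):
    """Return the final state reached by applying `controls` from x0."""
    x = x0
    for u in controls:
        x = dynamics(x, u)
    return x


def init_zero(horizon):
    """No reliance on the policy: start the solver from all-zero inputs."""
    return [0.0] * horizon


def init_shifted(x0, prev_controls):
    """Partial reliance: reuse the previous plan shifted by one step and let
    the policy fill only the newly exposed last input."""
    shifted = list(prev_controls[1:])
    return shifted + [policy(simulate(x0, shifted))]


def init_policy_rollout(x0, horizon):
    """Full reliance: the whole initial guess comes from simulating the policy."""
    controls, x = [], x0
    for _ in range(horizon):
        u = policy(x)
        controls.append(u)
        x = dynamics(x, u)
    return controls


if __name__ == "__main__":
    x0, previous_plan = (1.0, 0.0), [-2.0, -2.0, -1.0]
    print(init_zero(3))
    print(init_shifted(x0, previous_plan))
    print(init_policy_rollout(x0, 3))
```

In a full controller, the selected guess would be passed to the nonlinear solver as its starting point. Since such solvers are sensitive to where they start, the choice of strategy can affect both how many iterations they need and how often they fail to converge.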

PEPT's prospects look promising: it could be applied across a wide range of domains, including robotics, autonomous vehicles, and industrial automation. More broadly, combining MPC and RL offers a route to control systems that are both more capable and more responsive to changing conditions.

In this context, PEPT is a significant step forward in the development of efficient and effective control systems. By integrating learned policies into MPC frameworks, it points toward controllers that achieve strong performance at a computational cost suited to real-time use, a combination that could reshape how such systems are designed.

Cite this article: “Boosting Model Predictive Control with Reinforcement Learning: A New Paradigm for Efficient Nonlinear Control”, The Science Archive, 2025.

Model Predictive Control, Reinforcement Learning, Policy-Enhanced Partial Tightening, PEPT, Optimization, Robotics, Automation, Artificial Intelligence, Control Systems, Nano-Quadcopter, Tracking Task