Tuesday 22 April 2025

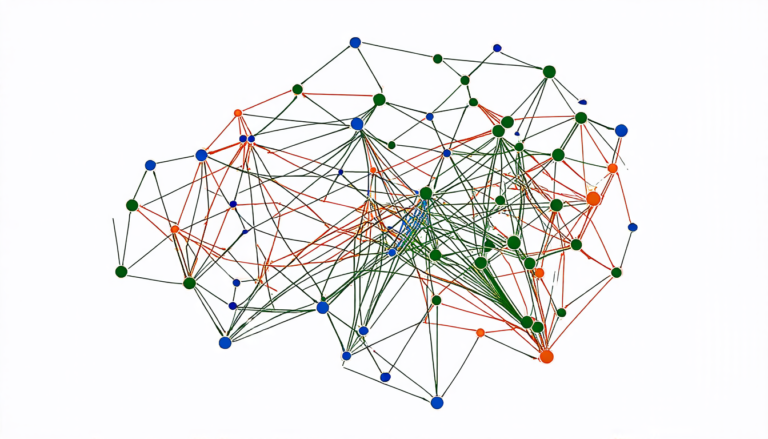

The quest for more efficient and effective ways to train machine learning models is an ongoing challenge in artificial intelligence. One widely studied approach is Federated Learning (FL), which lets multiple devices or organizations jointly train a shared model on their local data without exchanging that data with one another.
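To make the idea concrete, here is a minimal sketch of the aggregation step in Federated Averaging (FedAvg), the classic FL baseline. The article does not say which aggregation rule FedMetaNAS uses, so treat this as an illustrative assumption. Each client trains locally and sends only its parameters, and the server averages them, weighting each client by the size of its local dataset. The client values are made-up toy numbers.

```python
from typing import List

def fed_avg(client_weights: List[List[float]], client_sizes: List[int]) -> List[float]:
    """Average client parameter vectors, weighting each by its local dataset size."""
    total = sum(client_sizes)
    n_params = len(client_weights[0])
    global_weights = [0.0] * n_params
    for weights, size in zip(client_weights, client_sizes):
        for i, w in enumerate(weights):
            global_weights[i] += w * size / total
    return global_weights

# Three hypothetical clients, each holding a two-parameter model trained only on local data.
clients = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
sizes = [100, 100, 200]
print(fed_avg(clients, sizes))  # → [3.5, 4.5]
```

Only parameters leave each client, so the raw data stays local. The result is pulled toward the third client because it holds half of all the data.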

However, FL has limitations. A major one is that data across devices is often non-IID (not independent and identically distributed). Because each device's data can follow a different distribution, locally trained models become biased toward the characteristics of particular devices, and the aggregated global model can lose accuracy as a result.
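A common way to simulate non-IID data in experiments is a label-skew split, sketched below for a toy labelled dataset. The function name and parameters are hypothetical, and the article does not state how the FedMetaNAS experiments partitioned their data. Samples are sorted by label and cut into shards, and each client receives only a couple of shards, so it sees only a few classes.

```python
import random
from typing import Dict, List

def label_skew_split(labels: List[int], n_clients: int, shards_per_client: int = 2,
                     seed: int = 0) -> Dict[int, List[int]]:
    """Sort sample indices by label, cut them into equal shards, and deal a few
    shards to each client. With few shards per client, each client sees few classes."""
    rng = random.Random(seed)
    order = sorted(range(len(labels)), key=lambda i: labels[i])
    n_shards = n_clients * shards_per_client
    shard_size = len(order) // n_shards
    shards = [order[s * shard_size:(s + 1) * shard_size] for s in range(n_shards)]
    rng.shuffle(shards)
    return {
        c: [i for shard in shards[c * shards_per_client:(c + 1) * shards_per_client] for i in shard]
        for c in range(n_clients)
    }

# Hypothetical toy labels: 10 classes with 100 samples each.
labels = [cls for cls in range(10) for _ in range(100)]
split = label_skew_split(labels, n_clients=5)
for client, idx in split.items():
    print(client, "classes seen:", sorted({labels[i] for i in idx}))
```

With 10 classes of 100 samples and 5 clients, every shard holds a single class, so each client ends up with exactly two classes. A model trained on one client alone has never seen the other eight, which is the kind of bias that aggregation then has to cope with.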

To address this challenge, researchers have turned to Neural Architecture Search (NAS), a technique that enables machines to automatically design neural networks for specific tasks. By combining NAS with FL, they aim to create more effective and efficient models that can adapt to diverse data distributions.

A recent study proposes FedMetaNAS, a novel approach that integrates Model-Agnostic Meta-Learning (MAML) and soft pruning to optimize neural network architectures in FL settings. The authors report that their method outperforms existing approaches, reducing total processing time by more than 50%.

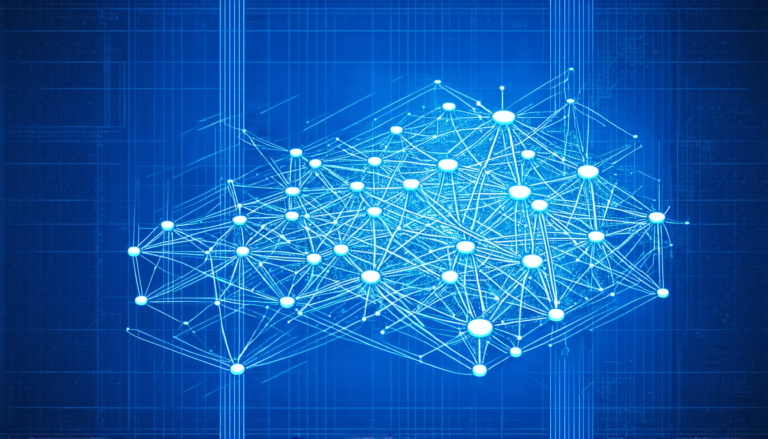

The key innovation behind FedMetaNAS is relaxing the search space with a technique called Gumbel-Softmax. This sparsifies the mixed operations during the search phase, so the weights over candidate operations converge toward a one-hot representation, that is, a single selected operation.
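The relaxation can be sketched with the standard Gumbel-Softmax formula: for architecture logits log α_i, draw noise g_i = -log(-log u_i) with u_i ~ Uniform(0, 1), and give operation i the weight exp((log α_i + g_i) / τ) / Σ_j exp((log α_j + g_j) / τ). As the temperature τ shrinks, the weights approach a one-hot vector. The candidate operations below are scalar stand-ins for real layers, and the article does not describe how FedMetaNAS parameterizes or anneals these weights, so this is a minimal sketch rather than the authors' implementation.

```python
import math
import random
from typing import Callable, List

def gumbel_softmax(logits: List[float], tau: float, rng: random.Random) -> List[float]:
    """Relaxed one-hot sample: softmax((logits + Gumbel(0, 1) noise) / tau)."""
    noisy = []
    for logit in logits:
        # Gumbel noise by inverse transform, g = -log(-log(u)); clamp u so log() stays finite.
        u = min(max(rng.random(), 1e-12), 1 - 1e-12)
        noisy.append((logit - math.log(-math.log(u))) / tau)
    m = max(noisy)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in noisy]
    total = sum(exps)
    return [e / total for e in exps]

def mixed_op(x: float, ops: List[Callable[[float], float]], logits: List[float],
             tau: float, rng: random.Random) -> float:
    """Relaxed NAS edge: a weighted sum of all candidate operations."""
    weights = gumbel_softmax(logits, tau, rng)
    return sum(w * op(x) for w, op in zip(weights, ops))

# Hypothetical scalar stand-ins for the candidate layers on one edge.
ops = [lambda x: x,            # skip connection
       lambda x: 0.0,          # "zero" op, i.e. no connection
       lambda x: max(0.0, x)]  # stand-in for conv + ReLU
logits = [0.5, -1.0, 2.0]      # architecture parameters, log(alpha)
rng = random.Random(0)
for tau in (5.0, 1.0, 0.1):
    print(tau, [round(w, 3) for w in gumbel_softmax(logits, tau, rng)])
print(mixed_op(1.5, ops, logits, tau=0.5, rng=rng))
```

At a high temperature the weights stay spread out; at τ = 0.1 one weight typically dominates, which is the one-hot behaviour the search relies on. For tensors, PyTorch offers `torch.nn.functional.gumbel_softmax` for the same computation.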

Additionally, FedMetaNAS employs a dual-learner system: a task learner adapts to each individual task, and a meta learner uses gradient information from the task learner to refine the global model. This helps the model adapt to diverse data distributions and reduces the impact of non-IID data.
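The dual-learner idea follows the MAML pattern of an inner loop and an outer loop. The sketch below is a deliberately tiny first-order version (it drops MAML's second-order terms) on a one-parameter model, where each hypothetical client's loss is (w - target)². The function names, learning rates, and targets are assumptions for illustration, not values from the paper.

```python
from typing import List

def grad(w: float, target: float) -> float:
    """Gradient of the toy client loss L(w) = (w - target)**2."""
    return 2.0 * (w - target)

def task_learner(w_global: float, target: float, inner_lr: float, steps: int) -> float:
    """Inner loop: adapt a copy of the global parameter to one client's task."""
    w = w_global
    for _ in range(steps):
        w -= inner_lr * grad(w, target)
    return w

def meta_learner(w_global: float, targets: List[float], inner_lr: float = 0.1,
                 outer_lr: float = 0.05, steps: int = 1) -> float:
    """Outer loop (first-order MAML): average the gradients measured at each client's
    adapted parameter and apply them to the shared global parameter."""
    meta_grads = [grad(task_learner(w_global, t, inner_lr, steps), t) for t in targets]
    return w_global - outer_lr * sum(meta_grads) / len(meta_grads)

# Hypothetical non-IID clients: each client's loss has a different optimum.
targets = [-1.0, 0.5, 3.0]
w = 0.0
for _ in range(200):
    w = meta_learner(w, targets)
print(round(w, 4))  # → 0.8333
```

The task learner takes a step toward each client's own optimum, and the meta learner updates the shared parameter using the gradients measured after that adaptation. In this quadratic toy the global parameter settles at the average of the client optima; the point is the two-loop structure rather than the result.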

The authors also apply soft pruning to the input nodes using the same Gumbel-Softmax relaxation, so that the choice of inputs to each node likewise converges to a one-hot representation during the search.
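Soft pruning of input nodes can be sketched by applying that relaxation to the choice of which earlier nodes feed a given node, rather than to the operations on an edge. Everything here is an assumption for illustration: the node values, the input-edge scores `beta`, the exponential temperature schedule, and keeping a single input after search (cell-based NAS methods such as DARTS typically keep two).

```python
import math
import random
from typing import List

def gumbel_softmax(logits: List[float], tau: float, rng: random.Random) -> List[float]:
    """Relaxed one-hot sample: softmax((logits + Gumbel(0, 1) noise) / tau)."""
    noisy = []
    for logit in logits:
        u = min(max(rng.random(), 1e-12), 1 - 1e-12)  # keep log() finite
        noisy.append((logit - math.log(-math.log(u))) / tau)
    m = max(noisy)
    exps = [math.exp(x - m) for x in noisy]
    total = sum(exps)
    return [e / total for e in exps]

def soft_pruned_node(inputs: List[float], beta: List[float], tau: float,
                     rng: random.Random) -> float:
    """Hypothetical node: mixes the outputs of earlier nodes using relaxed
    one-hot weights over its candidate input edges."""
    weights = gumbel_softmax(beta, tau, rng)
    return sum(w * h for w, h in zip(weights, inputs))

def temperature(epoch: int, tau0: float = 5.0, tau_min: float = 0.1,
                decay: float = 0.8) -> float:
    """Assumed exponential annealing; lower tau pushes the weights toward one-hot."""
    return max(tau_min, tau0 * decay ** epoch)

def prune(beta: List[float]) -> int:
    """After search, keep only the input edge with the largest score."""
    return max(range(len(beta)), key=lambda i: beta[i])

rng = random.Random(0)
predecessors = [0.3, -1.2, 2.0]  # outputs of three earlier nodes (toy values)
beta = [0.1, -0.5, 1.5]          # input-edge scores (toy values)
for epoch in (0, 5, 20):
    tau = temperature(epoch)
    print(epoch, round(tau, 3), round(soft_pruned_node(predecessors, beta, tau, rng), 3))
print("kept input:", prune(beta))  # → kept input: 2
```

As the temperature anneals toward its floor, the node's output becomes increasingly dominated by one predecessor, and after search only the highest-scoring input edge is kept.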

Experimental results show that FedMetaNAS outperforms existing methods in both accuracy and efficiency, achieving significant improvements on several benchmark datasets, including CIFAR-10 and MNIST, with reduced total processing time.

The implications of this research are far-reaching. By enabling more effective and efficient training of neural networks in FL settings, FedMetaNAS has the potential to accelerate the development of AI applications in various fields, from healthcare to finance.

While there is still much work to be done, the authors’ innovative approach offers a promising solution to the challenges facing FL.

Cite this article: “Unlocking Efficient Federated Learning through Meta-NAS and Pruning”, The Science Archive, 2025.

Machine Learning, Artificial Intelligence, Federated Learning, Neural Architecture Search, Non-Independent And Identically Distributed Data, Model-Agnostic Meta-Learning, Soft Pruning, Gumbel-Softmax, Dual-Learner System, Efficiency