Wednesday 16 July 2025

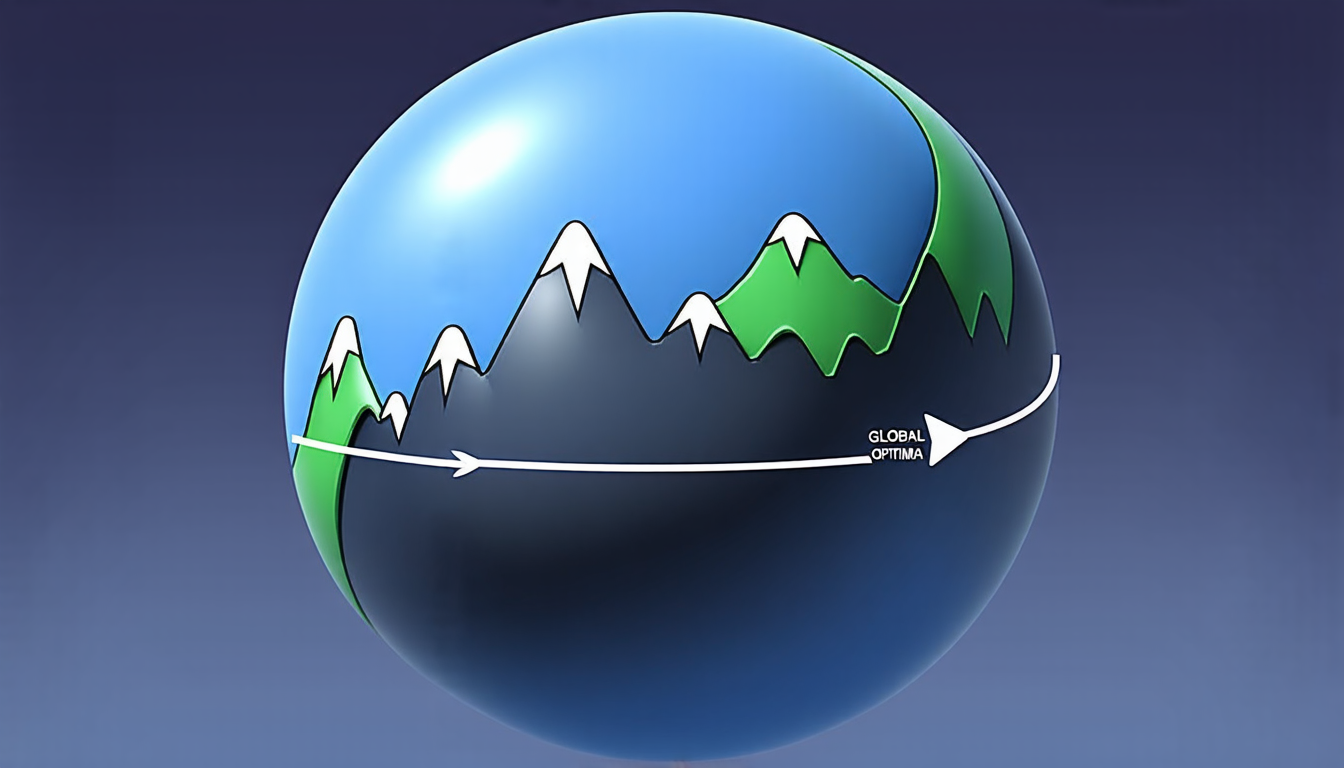

A newly proposed optimization method promises to overcome a major hurdle in a class of difficult mathematical problems: finding the global optimum rather than a merely local one.

The problem is this: when trying to maximize or minimize a function, it’s easy to get stuck in local maxima or minima, which are not necessarily the global optimum. This can be particularly frustrating when dealing with complex functions that have multiple local optima.
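To make the difficulty concrete, here is a minimal sketch of a local method getting stuck. The function `f`, the step size, and the starting points are illustrative assumptions and do not come from the paper.

```python
def f(x):
    """Toy objective with two local maxima (an illustrative assumption)."""
    return -x**4 + 4 * x**2 + x


def df(x):
    """Derivative of f."""
    return -4 * x**3 + 8 * x + 1


def gradient_ascent(x, step=0.01, iters=2000):
    """Plain fixed-step gradient ascent: climbs the slope it starts on."""
    for _ in range(iters):
        x += step * df(x)
    return x


left = gradient_ascent(-2.0)   # starts on the left slope
right = gradient_ascent(2.0)   # starts on the right slope

print(f"start -2.0 -> x = {left:.4f}, f(x) = {f(left):.4f}")
print(f"start +2.0 -> x = {right:.4f}, f(x) = {f(right):.4f}")
```

Both runs stop at a point where the derivative is zero, but only the run started on the right reaches the higher of the two peaks. The run started on the left has no way of knowing a better peak exists.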

One way to avoid getting stuck is to use homotopy methods, which continuously deform one problem into another. Typically, such a method starts from a simple problem whose solution is known, gradually transforms it into the original problem, and tracks the solution along the way. If the path between the two problems is well behaved, the tracked solution ends at a solution of the original problem, and the hope is that this endpoint is the global optimum rather than a local one.
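The general idea of a homotopy can be sketched in a few lines. This is a generic convex-combination homotopy on a toy one-dimensional function, not the pHOM algorithm from the paper; the target `f`, the easy problem `g(x) = -(x - x0)**2`, and all parameter values are assumptions chosen for illustration.

```python
def f(x):
    """Target objective with two local maxima (an illustrative assumption)."""
    return -x**4 + 4 * x**2 + x


def df(x):
    """First derivative of f."""
    return -4 * x**3 + 8 * x + 1


def d2f(x):
    """Second derivative of f."""
    return -12 * x**2 + 8


def track_maximizer(x0=0.0, steps=100, newton_iters=10):
    """Follow the maximizer of H(x, t) = (1 - t) * g(x) + t * f(x) as t goes
    from 0 to 1, where g(x) = -(x - x0)**2 is an easy problem whose
    maximizer x0 is known in advance."""
    x = x0
    for k in range(1, steps + 1):
        t = k / steps
        # Correct the previous solution with Newton's method on dH/dx = 0.
        for _ in range(newton_iters):
            grad = (1 - t) * (-2 * (x - x0)) + t * df(x)
            hess = (1 - t) * (-2) + t * d2f(x)
            x -= grad / hess
    return x


x_end = track_maximizer()
print(f"tracked maximizer: x = {x_end:.4f}, f(x) = {f(x_end):.4f}")
```

In this toy case the tracked path happens to end at the higher of the two peaks. A naive homotopy like this one carries no such guarantee in general, which is the gap that more careful constructions aim to close.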

The authors of this paper have developed a new homotopy method called probability-one homotopy (pHOM), which is designed specifically for solving problems like the sum of Rayleigh quotients and generalized Rayleigh quotients on the unit sphere. These types of problems arise in various fields, including signal processing, machine learning, and physics.
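For readers unfamiliar with the terms, the Rayleigh quotient of a symmetric matrix A at a vector x is xᵀAx / xᵀx, and the generalized Rayleigh quotient replaces the denominator with xᵀBx for a positive definite matrix B. The sketch below evaluates a sum of the two on the unit circle. The exact objective used in the paper is not given here, so the form of `objective` and the matrices `A`, `B`, and `C` are assumptions for illustration only.

```python
import math


def quad_form(M, x):
    """Return x^T M x for a square matrix M given as a list of rows."""
    n = len(x)
    return sum(x[i] * M[i][j] * x[j] for i in range(n) for j in range(n))


def rayleigh(A, x):
    """Rayleigh quotient x^T A x / x^T x."""
    return quad_form(A, x) / sum(xi * xi for xi in x)


def generalized_rayleigh(B, C, x):
    """Generalized Rayleigh quotient x^T B x / x^T C x (C positive definite)."""
    return quad_form(B, x) / quad_form(C, x)


# Hypothetical 2x2 example matrices.
A = [[2.0, 0.0], [0.0, 1.0]]
B = [[1.0, 0.0], [0.0, 3.0]]
C = [[2.0, 0.0], [0.0, 1.0]]


def objective(theta):
    """Sum of the two quotients at the unit vector (cos theta, sin theta)."""
    x = [math.cos(theta), math.sin(theta)]
    return rayleigh(A, x) + generalized_rayleigh(B, C, x)


# Brute-force scan of the half circle; feasible only in very low dimension.
grid = [math.pi * k / 1000 for k in range(1000)]
best_theta = max(grid, key=objective)
print(f"best theta = {best_theta:.4f}, objective = {objective(best_theta):.4f}")
```

A grid scan like this works only because the example is two-dimensional. In higher dimensions the unit sphere cannot be searched exhaustively, which is why methods with convergence guarantees matter.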

The key innovation is that pHOM relies on a probability-one condition to guarantee convergence to the global optimum. In homotopy terminology, “probability one” usually means that the guarantee holds for almost every choice of starting point, so the method should not depend on a carefully chosen initial guess.

To test the effectiveness of pHOM, the authors compared it with several other optimization algorithms on a set of 23,000 randomly generated problems. The results were impressive: pHOM converged to the global optimum on every problem, while the other algorithms failed to do so on many of them.

pHOM was also computationally efficient. It ran faster than many of the other algorithms tested and required fewer function evaluations.

One potential application of this work is in the field of signal processing, where pHOM could be used to optimize the design of filters or other signal processing systems. Another potential application is in machine learning, where pHOM could be used to optimize the parameters of a model.

Overall, this paper presents an exciting new approach to optimization that could enable significant advances in many fields. By providing a reliable and efficient way to find global optima, pHOM could change the way we solve complex mathematical problems.

Cite this article: “Probability-One Homotopy Method for Global Optimization”, The Science Archive, 2025.

Mathematics, Optimization, Homotopy Methods, Probability-One Condition, Global Optimum, Signal Processing, Machine Learning, Physics, Algorithms, Efficiency