Sunday 27 July 2025

The quest for fairness in artificial intelligence has taken a crucial step forward. Researchers have been investigating how different methods of evaluating bias in language models can lead to disparate results, and their findings are shedding light on the importance of transparency and consistency in AI development.

Large language models (LLMs) have changed the way we interact with technology. These AI systems can process and generate human-like text, making them a key component in applications ranging from customer service chatbots to language translation software. However, because they learn from vast amounts of data, they have raised concerns about bias and unfairness.

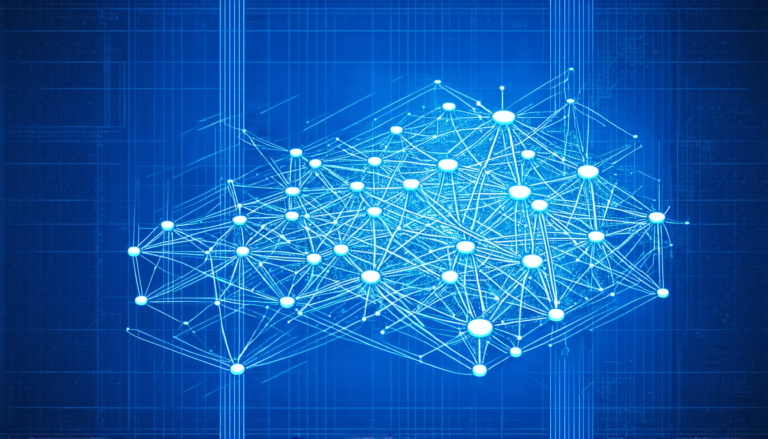

One major issue is that LLMs are trained on datasets that often reflect the social and cultural biases of the people who produced the text. This means that models may perpetuate harmful stereotypes or reinforce existing inequalities. For example, a language model trained on a dataset dominated by men’s voices may be more likely to use male-centric language and may represent women’s experiences less accurately.

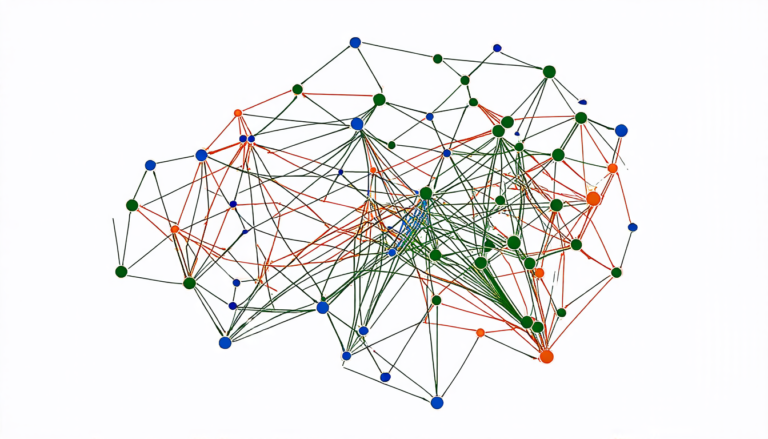

To address this problem, researchers have developed various methods for evaluating bias in LLMs. These approaches include measuring how models behave on specific tasks, for example checking whether the text they generate shows gender or racial bias. However, a recent study has found that different evaluation methods can produce vastly different results, leading to confusion and uncertainty about which models are actually fairer.

The researchers used a range of language models and evaluated them using various bias metrics. They found that some models performed well on one metric but poorly on another, highlighting the need for a more comprehensive understanding of how these systems are developed and tested.
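To make this kind of disagreement concrete, here is a minimal Python sketch. The model names, metric names, and scores are all hypothetical and are not taken from the study; the sketch assumes that a lower score means less measured bias. It ranks three models under two metrics and computes a Kendall rank correlation, where a value near 1 means the metrics agree on the ordering and a value near -1 means they reverse it.

```python
from itertools import combinations

# Hypothetical bias scores (lower = less measured bias). Illustrative only,
# not results from the study described in this article.
scores = {
    "metric_a": {"model_x": 0.12, "model_y": 0.30, "model_z": 0.21},
    "metric_b": {"model_x": 0.40, "model_y": 0.15, "model_z": 0.25},
}


def ranking(metric_scores):
    """Return model names ordered from least to most measured bias."""
    return sorted(metric_scores, key=metric_scores.get)


def kendall_tau(a, b):
    """Kendall rank correlation between two score dicts over the same models.

    Counts model pairs that both metrics order the same way (concordant)
    versus opposite ways (discordant). Tied pairs are ignored.
    """
    concordant = discordant = 0
    for m1, m2 in combinations(a, 2):
        product = (a[m1] - a[m2]) * (b[m1] - b[m2])
        if product > 0:
            concordant += 1
        elif product < 0:
            discordant += 1
    pairs = concordant + discordant
    return (concordant - discordant) / pairs if pairs else 0.0


if __name__ == "__main__":
    for name, metric_scores in scores.items():
        print(name, ranking(metric_scores))
    print("Kendall tau:", kendall_tau(scores["metric_a"], scores["metric_b"]))
```

With these made-up numbers, the model that looks least biased under one metric looks most biased under the other, so a claim that one model is "the fairest" depends entirely on which metric is reported.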

One potential solution is to use multiple evaluation methods in tandem. This approach can provide a more complete picture of a model’s biases and help developers identify areas where improvements are needed. Additionally, researchers are exploring new techniques for training LLMs that prioritize fairness and transparency from the outset.
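One way to use several metrics together is to report each model's rank under every metric side by side rather than collapsing everything into a single number. The Python sketch below illustrates the idea under stated assumptions: the metric names (`stereotype_score`, `toxicity_gap`, `sentiment_gap`), model names, and values are hypothetical, and lower scores are assumed to mean less measured bias. It is not the method used in the study.

```python
# Hypothetical bias scores (lower = less measured bias). Metric and model
# names are placeholders chosen for illustration only.
scores = {
    "stereotype_score": {"model_x": 0.12, "model_y": 0.30, "model_z": 0.21},
    "toxicity_gap": {"model_x": 0.40, "model_y": 0.15, "model_z": 0.25},
    "sentiment_gap": {"model_x": 0.10, "model_y": 0.22, "model_z": 0.18},
}


def rank_table(all_scores):
    """Map each model to its rank (1 = least measured bias) under every metric."""
    table = {}
    for metric, by_model in all_scores.items():
        ordered = sorted(by_model, key=by_model.get)
        for position, model in enumerate(ordered, start=1):
            table.setdefault(model, {})[metric] = position
    return table


def summarize(all_scores):
    """Summarize each model by its worst rank and by how much its ranks vary."""
    summary = {}
    for model, ranks in rank_table(all_scores).items():
        values = list(ranks.values())
        summary[model] = {
            "ranks": ranks,
            "worst_rank": max(values),
            "rank_spread": max(values) - min(values),
        }
    return summary


if __name__ == "__main__":
    for model, info in summarize(scores).items():
        print(model, info)
```

A large `rank_spread` signals that the metrics disagree about a model, which tells developers where to look more closely. Reporting the worst rank alongside the individual ranks is a deliberately cautious design choice: a model cannot look good simply because it does well on the one metric that happens to be reported.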

The development of fairer AI systems has significant implications for many areas of society, including education, healthcare, and finance. As we continue to rely on these technologies to make decisions and provide services, it is essential that they are designed with fairness and equity in mind.

The study’s findings underscore the importance of transparency and consistency in AI development. Because a model can look fair under one metric and biased under another, reporting which evaluation methods were used matters as much as the scores themselves. By using multiple evaluation methods and prioritizing fairness from the beginning, researchers can work toward language models that are not only more accurate but also more just.

Cite this article: “Fairness in AI: The Quest for Transparency and Consistency”, The Science Archive, 2025.

Language Models, Artificial Intelligence, Bias, Fairness, Transparency, Consistency, Machine Learning, Algorithms, Evaluation Methods, Equity.