Sunday 02 March 2025

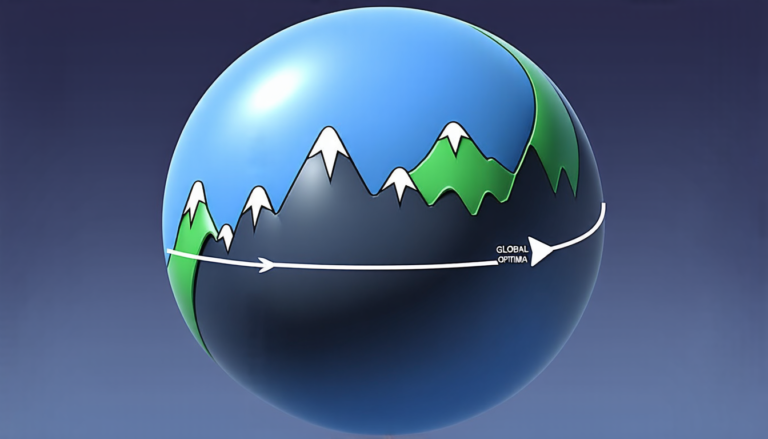

The pursuit of optimization has been a driving force behind many technological advancements, from machine learning and computer vision to robotics and finance. At its core, optimization involves finding the best solution among a vast array of possibilities, often by minimizing or maximizing some objective function. However, this process can become increasingly complex when dealing with multiple objectives, non-convex constraints, and noisy data.

Researchers have long sought to develop efficient and effective algorithms for tackling these challenges, and a recent paper has made significant strides in this area. The authors present a novel approach to solving optimization problems involving the sum of multiple operators, each of which can be non-monotone or non-smooth.

The key building block is the Douglas-Rachford (DR) method, a classical splitting algorithm named after its creators, Douglas and Rachford, who introduced it in the 1950s. The DR method is effective for problems involving the sum of two operators, but extending it to more than two operators is not straightforward, which has limited its use on more complex problems.
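To make the two-operator case concrete, here is a minimal sketch of the textbook DR iteration for minimizing f(x) + g(x) in the convex setting. It is not the paper's algorithm: the toy problem (a quadratic plus an L1 penalty), the step size `gamma`, and the function names `douglas_rachford` and `soft_threshold` are assumptions chosen for illustration, and the non-monotone setting discussed in the paper is not covered.

```python
import numpy as np

def soft_threshold(v, t):
    """Proximal map of t * ||.||_1 (componentwise shrinkage)."""
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)

def douglas_rachford(prox_f, prox_g, z0, iters=200):
    """Classical two-operator DR iteration (hypothetical helper).

    prox_f and prox_g are the proximal maps of gamma*f and gamma*g.
    """
    z = np.asarray(z0, dtype=float).copy()
    for _ in range(iters):
        x = prox_f(z)              # backward step on f
        y = prox_g(2.0 * x - z)    # backward step on g at the reflected point
        z = z + y - x              # update the governing sequence
    return prox_f(z)               # the "shadow" point approximates the solution

# Toy problem (an assumption): f(x) = 0.5*||x - a||^2, g(x) = lam*||x||_1.
a = np.array([3.0, 0.5, -2.0])
lam, gamma = 1.0, 1.0
prox_f = lambda z: (z + gamma * a) / (1.0 + gamma)
prox_g = lambda z: soft_threshold(z, gamma * lam)

x_star = douglas_rachford(prox_f, prox_g, np.zeros_like(a))
print(x_star)  # approximately [2, 0, -1], i.e. soft_threshold(a, lam)
```

For this toy problem the minimizer is known in closed form (soft-thresholding of `a`), which makes it easy to check that the iteration converges to the right point.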

The new paper addresses this limitation by introducing a product space reformulation, which allows the authors to extend the DR method to problems involving the sum of m operators. In the standard form of this reformulation, each operator, treated as a set-valued map, acts on its own copy of the variable, the copies are constrained to agree, and the DR method is then applied to the resulting two-operator problem in the product space.
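The product space idea can be sketched in a few lines for the simplest convex case. The snippet below uses the standard reformulation, in which the m-term problem becomes a two-term problem: a separable sum over m copies of the variable, plus the constraint that all copies are equal. The helper names `product_space_dr` and `make_quadratic_prox`, the weighted quadratic terms, and the step size are assumptions made for illustration, and the paper's actual scheme for non-monotone operators may differ.

```python
import numpy as np

def make_quadratic_prox(w, a, gamma):
    """Proximal map of gamma * f for f(x) = 0.5 * w * ||x - a||^2."""
    return lambda z: (z + gamma * w * a) / (1.0 + gamma * w)

def product_space_dr(proxes, dim, iters=500):
    """DR applied in the product space (hypothetical helper).

    Row i of Z is the copy of the variable owned by the i-th term.
    """
    m = len(proxes)
    Z = np.zeros((m, dim))
    for _ in range(iters):
        # Projection onto the diagonal {x_1 = ... = x_m} is plain averaging.
        x_bar = Z.mean(axis=0)
        # The prox of the separable sum acts on each copy independently.
        Y = np.stack([prox(2.0 * x_bar - Z[i]) for i, prox in enumerate(proxes)])
        Z = Z + Y - x_bar          # DR update, broadcast over the m copies
    return Z.mean(axis=0)

# Toy problem (an assumption): minimize sum_i 0.5 * w_i * ||x - a_i||^2.
gamma = 1.0
weights = np.array([1.0, 2.0, 3.0])
anchors = np.array([[0.0, 0.0], [2.0, 1.0], [4.0, -1.0]])
proxes = [make_quadratic_prox(w, a, gamma) for w, a in zip(weights, anchors)]

x_star = product_space_dr(proxes, dim=2)
expected = (weights[:, None] * anchors).sum(axis=0) / weights.sum()
print(x_star, expected)  # both are the weighted mean of the anchors
```

The minimizer of this toy objective is the weighted mean of the anchors, so the output can be checked directly. The design point is that only two operations are ever needed: an averaging step that enforces agreement between the copies, and m independent proximal steps that could run in parallel.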

The benefits of this approach are twofold. First, it enables the solution of optimization problems that were previously intractable due to the complexity of the objective function or constraints. Second, it provides a more robust and efficient algorithm than previous methods, which can become stuck in local minima or fail to converge in the presence of noise.

To demonstrate the effectiveness of their approach, the authors provide several examples of optimization problems that can be solved using the extended DR method. These include problems involving the minimization of a sum of convex functions, as well as problems with non-convex constraints and noisy data.

The paper reports fast convergence and high accuracy across a range of test cases. The authors also provide theoretical guarantees for the algorithm’s performance, which further underscore its potential for real-world applications.

In practical terms, this research has significant implications for fields such as machine learning, computer vision, and robotics, where optimization is often used to solve complex problems.

Cite this article: “Optimization Breakthrough: Extending the Douglas-Rachford Method to Solve Complex Problems”, The Science Archive, 2025.

Optimization, Algorithms, Machine Learning, Computer Vision, Robotics, Non-Convex Constraints, Noisy Data, Product Space Reformulation, Douglas-Rachford Method, Set-Valued Functions