Sunday 23 March 2025

Researchers have long sought to improve the ability of language models to reason and solve complex problems. While these models have made tremendous strides in recent years, they still struggle to tackle tasks that require multi-step reasoning and planning. A new approach, Policy Guided Tree Search (PGTS), aims to bridge this gap by integrating reinforcement learning with structured tree exploration.

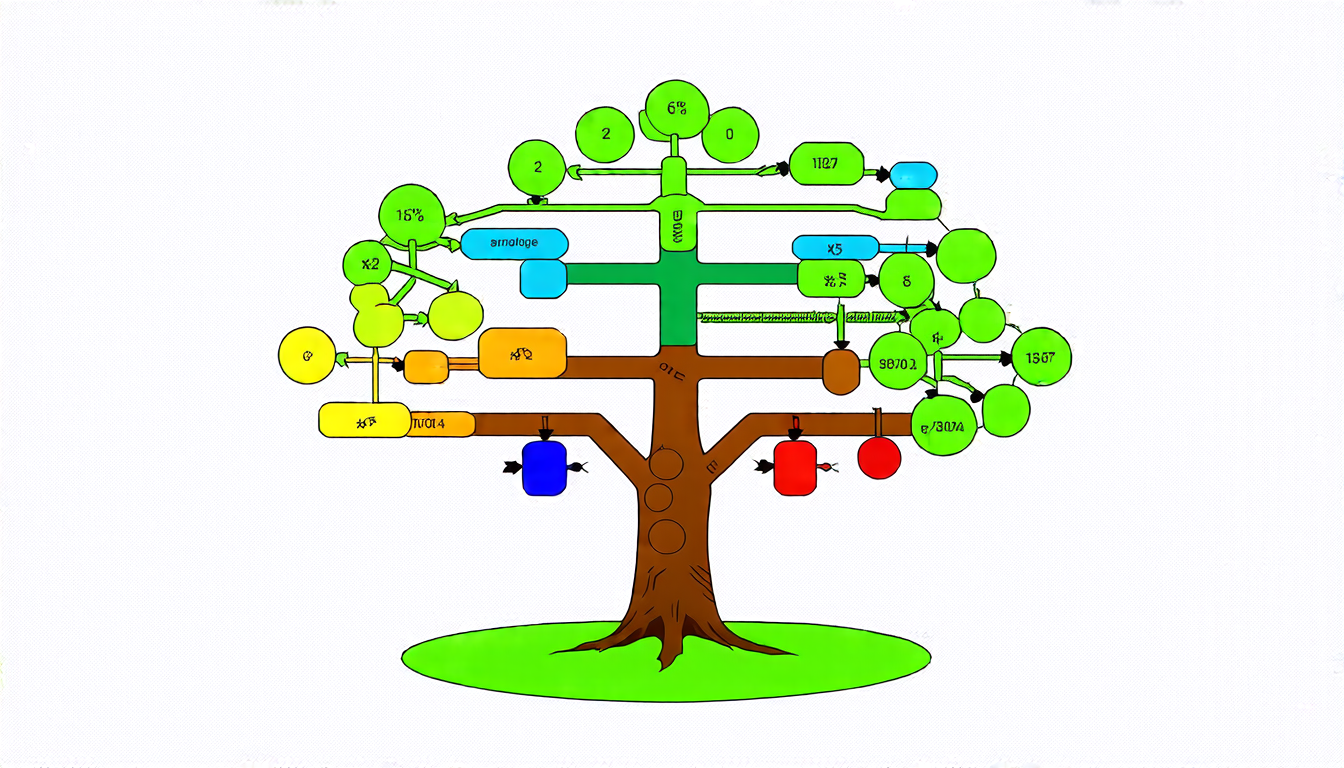

The core idea behind PGTS is to model the reasoning process as a graph (in practice, a search tree) that is built dynamically: each node represents a partial solution, and each edge represents a transition from one partial solution to the next. A policy network, trained with Proximal Policy Optimization (PPO), predicts both an action and a value estimate from the current node’s features and the rewards on its edges. Because the learned policy decides where to search next, the reasoning space can be explored more efficiently, without relying on manual heuristics or exhaustive search.
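To make the idea concrete, here is a minimal Python sketch of a policy steering a search over partial solutions. It is an illustration under stated assumptions, not the authors' implementation: the action set (`expand`, `backtrack`, `terminate`), the `Node` class, `propose_step`, and `toy_policy` are all hypothetical names, the hand-written policy stands in for a network trained with PPO, and a toy arithmetic task (reach a target sum) stands in for a language model generating reasoning steps.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Tuple


@dataclass
class Node:
    """A partial solution: the sequence of steps taken so far."""
    steps: Tuple[int, ...]
    parent: Optional["Node"] = None
    edge_reward: float = 0.0  # reward on the edge from parent to this node
    children: List["Node"] = field(default_factory=list)


# A policy maps the current node to (action, value estimate).
Policy = Callable[[Node], Tuple[str, float]]


def propose_step(node: Node) -> int:
    """Hypothetical stand-in for the language model proposing a next step.

    Each time the same node is expanded again, a different candidate is tried.
    """
    candidates = (3, 2, 1)
    return candidates[len(node.children) % len(candidates)]


def toy_policy(target: int) -> Policy:
    """Hand-written stand-in for a learned policy network (assumption)."""
    def policy(node: Node) -> Tuple[str, float]:
        total = sum(node.steps)
        value = -abs(target - total)  # closer to the target is better
        if total == target:
            return "terminate", value
        if total > target:
            return "backtrack", value
        return "expand", value
    return policy


def search(root: Node, policy: Policy, target: int, max_actions: int = 50) -> Node:
    """Follow the policy's actions until it terminates or the budget runs out."""
    current = root
    for _ in range(max_actions):
        # The value estimate would matter during training; it is unused here.
        action, _value = policy(current)
        if action == "terminate":
            return current
        if action == "backtrack" and current.parent is not None:
            current = current.parent
            continue
        # "expand": add one child to the current node and move to it.
        step = propose_step(current)
        new_steps = current.steps + (step,)
        child = Node(
            steps=new_steps,
            parent=current,
            edge_reward=-abs(target - sum(new_steps)),
        )
        current.children.append(child)
        current = child
    return current


result = search(Node(steps=()), toy_policy(7), target=7)
print(result.steps)
```

In this toy run the search overshoots the target twice, backtracks each time, and then finds a sequence of steps that sums to 7. The point of the sketch is the control flow: the policy, rather than a fixed heuristic, chooses at every node whether to go deeper, retreat, or stop.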

To evaluate PGTS, the researchers tested it on four datasets: GSM8K, MATH, PrOntoQA, and StrategyQA. These benchmarks cover a diverse set of problems, from mathematical word problems to logical reasoning challenges. According to the reported results, PGTS outperforms existing methods in both accuracy and computational efficiency.

One key advantage of PGTS is its ability to adapt to different problem types and complexities. Because the policy network learns how to move through the graph of partial solutions, it can adjust its exploration strategy to the problem at hand instead of following a fixed search procedure. This flexibility lets PGTS tackle problems that are difficult for methods that rely on manual heuristics or exhaustive search.

PGTS also offers practical benefits. For example, it can be used to enhance existing language models by giving them a more effective way to reason through complex tasks. The approach could also, in principle, be adapted to other domains and applications, such as natural language processing, computer vision, and robotics.

While PGTS is an impressive achievement, there are still challenges to overcome before it can be widely adopted. For instance, the approach requires large amounts of training data and computational resources, which can be a barrier for some organizations. Additionally, PGTS may not generalize well to all problem types or domains, requiring further research and development.

Despite these challenges, PGTS represents an important step forward in the quest to create more intelligent and capable language models. By integrating reinforcement learning with structured tree exploration, researchers have created an approach that is both effective and efficient. As the field continues to evolve, it will be exciting to see how PGTS and similar approaches are used to tackle even more complex challenges and applications.

Cite this article: “Policy Guided Tree Search: A New Approach to Complex Reasoning in Language Models”, The Science Archive, 2025.

Language Models, Reinforcement Learning, Tree Search, Policy Guided Tree Search, PGTS, Proximal Policy Optimization, Graph Modeling, Reasoning Process, Complex Problem Solving, Computational Efficiency

Reference: Yang Li, “Policy Guided Tree Search for Enhanced LLM Reasoning” (2025).