Tuesday 03 June 2025

The quest for efficient and private machine learning has led researchers down a rabbit hole of innovative solutions. A recent development in this field is an approach to distributed learning in which devices collaborate to train models without compromising data security or communication efficiency.

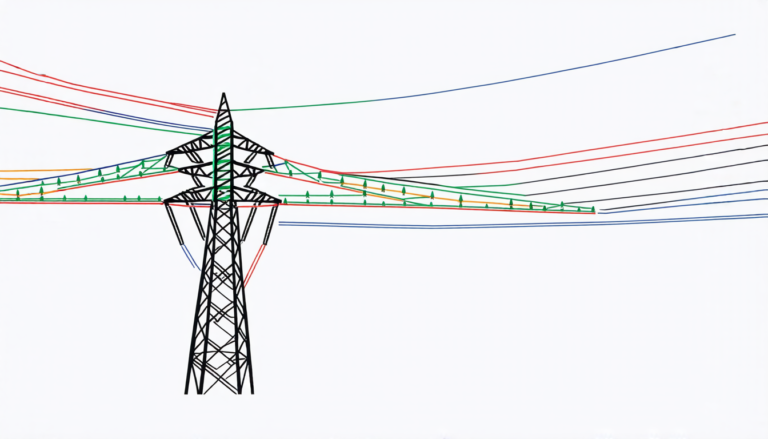

At its core, the method combines several techniques to enable decentralized training while minimizing the need for data exchange. By employing a hierarchical lossy compression scheme, the system cuts the amount of information transmitted between devices, which in turn lowers latency and energy consumption.
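The article does not spell out the compression scheme itself. To give a feel for why lossy compression saves so much traffic, here is a minimal sketch that assumes plain uniform scalar quantization of a vector of values a device might send (for instance a model update). This is a generic stand-in, not the authors' method, and `quantize`, `dequantize` and `payload_bits` are hypothetical helpers.

```python
import numpy as np

def quantize(update: np.ndarray, bits: int):
    """Uniformly quantize a float vector to `bits` bits per entry (hypothetical helper).

    Returns integer codes plus the offset and step needed to dequantize.
    """
    x = update.astype(np.float64)
    lo, hi = float(x.min()), float(x.max())
    levels = 2 ** bits - 1
    step = (hi - lo) / levels if hi > lo else 1.0
    codes = np.round((x - lo) / step).astype(np.uint32)
    return codes, lo, step

def dequantize(codes: np.ndarray, lo: float, step: float) -> np.ndarray:
    """Map integer codes back to approximate real values."""
    return lo + codes.astype(np.float64) * step

def payload_bits(n: int, bits: int) -> int:
    """n codes of `bits` bits each, plus two float32 side values (lo, step)."""
    return n * bits + 2 * 32

rng = np.random.default_rng(0)
update = rng.normal(size=10_000).astype(np.float32)
codes, lo, step = quantize(update, bits=4)
recon = dequantize(codes, lo, step)

raw = update.size * 32                      # float32 entries sent as-is
compressed = payload_bits(update.size, 4)   # 4-bit codes plus side info
print(f"raw: {raw} bits, compressed: {compressed} bits ({raw / compressed:.1f}x smaller)")
print("max abs error:", np.abs(recon - update).max(), "bound:", step / 2)
```

Dropping from 32 to 4 bits per value shrinks the payload roughly eightfold, at the cost of a reconstruction error of at most half a quantization step per entry.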

But what does this mean in practice? Let’s break it down. In traditional centralized setups, each device would typically send its raw data to a central server or to other devices for training. This approach has several drawbacks: it requires significant amounts of bandwidth, storage, and computational resources; it may compromise data privacy because the data must be aggregated in one place; and it can lead to imbalanced models when devices hold varying amounts of training data.

The new method tackles these issues with a lossy compression scheme that selectively discards information from each device’s dataset. Devices transmit only the most essential details, which reduces the amount of data that must be exchanged to train the model. The hierarchical structure ensures that the most critical information is preserved and transmitted first, while less important details are compressed more aggressively or discarded.
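One common way to get a "most important first" ordering is successive refinement: a coarse layer is sent first, and each later layer quantizes the residual left by the earlier ones with a finer step. The sketch below assumes that technique as an illustration; the article does not say the authors use it, and `encode_layers`, `decode_prefix` and the refinement `ratio` are hypothetical.

```python
import numpy as np

def encode_layers(x: np.ndarray, step0: float, num_layers: int, ratio: int = 4):
    """Successive-refinement encoding (hypothetical helper): each layer quantizes
    the residual left by previous layers with a step `ratio` times finer."""
    layers = []
    residual = x.astype(np.float64)
    step = step0
    for _ in range(num_layers):
        codes = np.round(residual / step).astype(np.int64)
        layers.append((codes, step))
        residual = residual - codes * step   # what the next layer must refine
        step /= ratio
    return layers

def decode_prefix(layers, k: int) -> np.ndarray:
    """Reconstruct from only the first k layers, e.g. if transmission stops early."""
    return sum(codes * step for codes, step in layers[:k])

rng = np.random.default_rng(1)
x = rng.normal(size=1_000)
layers = encode_layers(x, step0=1.0, num_layers=3)
for k in range(1, 4):
    err = np.abs(decode_prefix(layers, k) - x).max()
    print(f"layers used: {k}, max abs error: {err:.4f}")
```

Because the coarse layer alone already gives a usable approximation, a receiver can stop after any prefix of layers; each extra layer shrinks the worst-case error by the refinement ratio.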

The system also incorporates a mechanism for adaptively adjusting the compression level based on the device’s available resources and the learning task at hand. This adaptability enables devices with limited capabilities to participate in the training process without being overwhelmed by the computational demands.
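As a rough illustration of adapting the compression level to a device's resources, the sketch below assumes the only constraint is a per-round communication budget in bits and picks the finest quantization that fits. The budget model, the candidate bit widths, the 64 bits of side information, and the device names are all hypothetical; the article's mechanism also accounts for the learning task, which this sketch does not model.

```python
from typing import Optional, Sequence

def choose_bits(num_values: int, budget_bits: int,
                options: Sequence[int] = (16, 8, 4, 2, 1),
                side_info_bits: int = 64) -> Optional[int]:
    """Pick the finest quantization (most bits per value) whose payload fits
    the device's budget; return None if even the coarsest option does not fit."""
    for bits in sorted(options, reverse=True):
        if num_values * bits + side_info_bits <= budget_bits:
            return bits
    return None

n = 100_000  # number of values each device must send per round
budgets = {"gateway": 2_000_000, "phone": 500_000, "sensor": 150_000, "tag": 50_000}
for device, budget in budgets.items():
    print(device, choose_bits(n, budget))
```

Under these assumed numbers the well-provisioned device sends 16-bit values, weaker devices fall back to 4 or 1 bits, and a device whose budget cannot fit even 1 bit per value gets `None`, signalling that it would need a coarser scheme or to sit the round out.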

But what about security? Can this system truly protect sensitive data while still allowing devices to collaborate? The work suggests it can. By leveraging lattice coding techniques, the method is designed so that even if an attacker intercepted and analyzed the compressed data, they could not recover the original information. This adds an additional layer of protection against potential security threats.
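The article does not say which lattice the method uses or how the security argument works. To make the lattice-coding ingredient a little more concrete, the sketch below assumes subtractive dithered quantization on the simplest lattice, the scaled integer lattice ΔZ^n, with the dither generated from a seed shared by sender and receiver. The function names and the shared-seed arrangement are assumptions, and the sketch shows only the encode/decode step; it does not by itself demonstrate any secrecy property.

```python
import numpy as np

def dithered_lattice_quantize(x: np.ndarray, delta: float, seed: int) -> np.ndarray:
    """Quantize x onto the lattice delta*Z^n after adding a pseudo-random dither
    drawn uniformly from the Voronoi cell [-delta/2, delta/2)^n (hypothetical helper).
    Returns integer lattice coordinates, which are what would be transmitted."""
    dither = np.random.default_rng(seed).uniform(-delta / 2, delta / 2, size=x.shape)
    return np.round((x + dither) / delta).astype(np.int64)

def dithered_lattice_decode(codes: np.ndarray, delta: float, seed: int) -> np.ndarray:
    """Regenerate the same dither from the shared seed and subtract it."""
    dither = np.random.default_rng(seed).uniform(-delta / 2, delta / 2, size=codes.shape)
    return codes * delta - dither

x = np.array([0.12, -1.73, 2.4, 0.0])
codes = dithered_lattice_quantize(x, delta=0.5, seed=42)
x_hat = dithered_lattice_decode(codes, delta=0.5, seed=42)
print(codes, np.abs(x_hat - x).max())
```

With a subtractive dither, the reconstruction error stays within half a lattice step per coordinate and is statistically independent of the input, a standard property of dithered lattice quantization. Practical schemes usually rely on higher-dimensional lattices than ΔZ^n to get better compression for the same distortion.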

The implications of this work are far-reaching. It has the potential to enable more widespread adoption of distributed learning in industries where data privacy is a top concern, such as healthcare or finance. It could also lead to more efficient use of resources in edge computing scenarios, where devices need to collaborate to train models without relying on centralized infrastructure.

As researchers continue to push the boundaries of machine learning and data science, innovations like this hierarchical lossy compression scheme will play a crucial role in shaping the future of AI development.

Cite this article: “Secure and Efficient Distributed Machine Learning with Hierarchical Lossy Compression”, The Science Archive, 2025.

Machine Learning, Distributed Learning, Data Security, Compression Scheme, Hierarchical Structure, Lattice Coding, Edge Computing, Resource Efficiency, Data Privacy, Decentralized Training

Reference: Natalie Lang, Alon Helvitz, Nir Shlezinger, “Memory-Efficient Distributed Unlearning” (2025).