Thursday 26 June 2025

Researchers have made significant progress in the field of optimization, developing new methods that can tackle complex problems more efficiently than ever before.

Optimization is a crucial process in many fields, from computer science to economics and engineering. It involves finding the best solution among many possible options, often subject to constraints. In recent years, researchers have been developing new algorithms that find these solutions faster and more accurately.

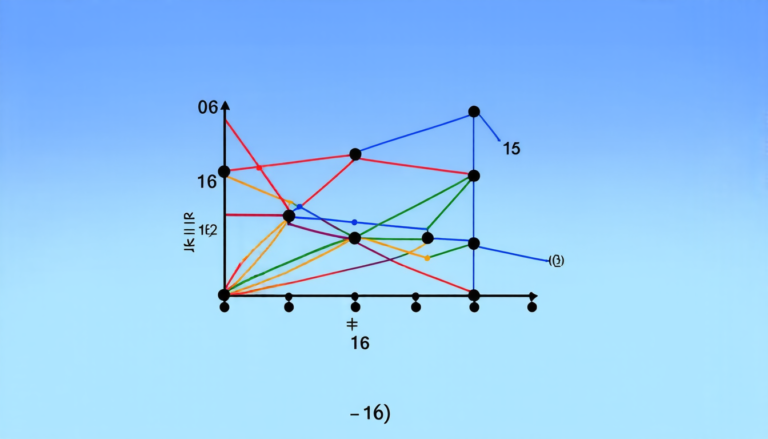

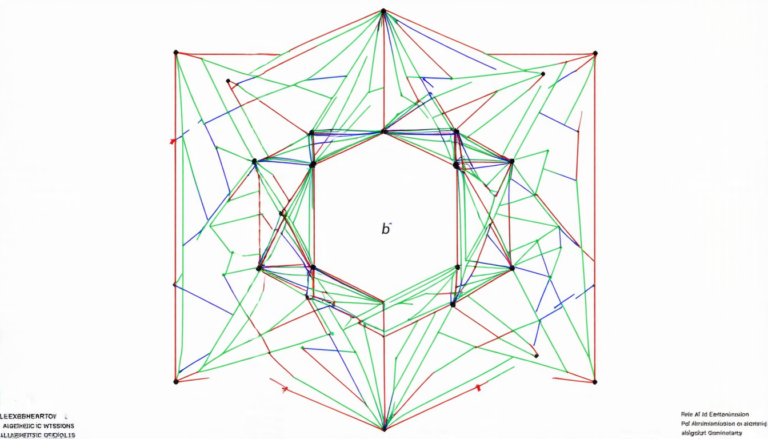

One of the key breakthroughs has come from the development of mirror descent methods. These methods generalize ordinary gradient descent: instead of measuring each step with Euclidean distance, they use a distance-like function (a Bregman divergence) chosen to match the geometry of the problem. For convex functions on suitable domains, this can lead to the optimal solution in fewer iterations than traditional methods.
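To make the idea concrete, here is a minimal sketch of one classical instance, entropic mirror descent (also known as exponentiated gradient) on the probability simplex. It is not the generalized method from the research described here. The toy objective, the `costs` values, the step-size schedule, and all function names are assumptions chosen for illustration.

```python
import math


def entropic_mirror_descent(subgradient, objective, dim, steps=20000, eta0=0.1):
    """Minimize a convex function over the probability simplex.

    Uses the negative-entropy mirror map, which turns the mirror descent
    step into a multiplicative update followed by renormalization.
    """
    x = [1.0 / dim] * dim  # start at the uniform distribution
    best_x, best_val = list(x), objective(x)
    for k in range(steps):
        g = subgradient(x)
        eta = eta0 / math.sqrt(k + 1)  # decaying step size (illustrative choice)
        # Multiplicative update: coordinates with a large subgradient shrink.
        w = [xi * math.exp(-eta * gi) for xi, gi in zip(x, g)]
        total = sum(w)
        x = [wi / total for wi in w]  # renormalize back onto the simplex
        val = objective(x)
        if val < best_val:  # subgradient methods are not monotone, so track the best
            best_x, best_val = list(x), val
    return best_x, best_val


# Hypothetical toy problem: f(x) = max_i costs[i] * x[i], which is convex
# but non-smooth (it has kinks wherever two terms tie for the maximum).
costs = [1.0, 2.0, 4.0]


def objective(x):
    return max(c * xi for c, xi in zip(costs, x))


def subgradient(x):
    # One valid subgradient: the gradient of a term attaining the maximum.
    j = max(range(len(x)), key=lambda i: costs[i] * x[i])
    g = [0.0] * len(x)
    g[j] = costs[j]
    return g


best_x, best_val = entropic_mirror_descent(subgradient, objective, dim=3)
print(best_x, best_val)
```

For this toy problem the exact minimizer has coordinates proportional to `1 / costs[i]`, so the returned point can be compared against a known answer. The multiplicative update keeps every coordinate positive and summing to one, so no separate projection step is needed.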

The researchers behind this work have been able to generalize these mirror descent methods to non-smooth and non-Lipschitz optimization problems. This is significant because many real-world problems don’t fit neatly into the smooth, Lipschitz-continuous category that traditional methods assume.
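For reference, the two assumptions mentioned above are usually stated as follows. These are the textbook definitions, with $f$, $L$, and the norms as generic notation rather than the researchers' own:

$$
|f(x) - f(y)| \le L\,\|x - y\| \quad \text{(Lipschitz continuity)}, \qquad
\|\nabla f(x) - \nabla f(y)\|_* \le L\,\|x - y\| \quad \text{(smoothness)}.
$$

For instance, $f(x) = |x|$ is Lipschitz but not smooth, since it has no gradient at $0$, while $f(x) = x^2$ on the whole real line is smooth but not Lipschitz, because its slope grows without bound.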

For example, consider a company trying to optimize its production schedule to minimize costs while meeting demand. The problem might involve complex constraints and non-linear relationships between variables, making it difficult to model using traditional optimization techniques.

The new mirror descent method is designed for this type of problem. Because it does not rely on the objective being smooth or Lipschitz continuous, it can be applied where the assumptions of traditional methods fail, reaching an accurate solution in fewer iterations.

Another important aspect of this research is its potential impact on machine learning. Training a model typically means minimizing a loss function, so optimization sits at the core of many machine learning algorithms, and the new mirror descent method could be used to improve their performance.

The researchers have also demonstrated the effectiveness of their algorithm through numerical experiments, showing that it outperforms traditional methods in terms of convergence rate and accuracy.

Overall, this research represents an important step forward in optimization theory and has significant implications for many fields. By developing more efficient and accurate optimization methods, we can tackle complex problems more effectively and make progress on a wide range of challenges.

Cite this article: “Breaking New Ground: Mirror Descent Methods Revolutionize Optimization Theory”, The Science Archive, 2025.

Optimization, Mirror Descent, Algorithms, Convex Functions, Non-Smooth, Non-Lipschitz, Machine Learning, Optimization Problems, Production Scheduling, Numerical Experiments