Sunday 27 July 2025

Artificial intelligence systems are often described as black boxes. They can process vast amounts of data and learn from it, but the way they arrive at a particular conclusion remains largely opaque to the people who use them. Now, researchers have made a significant breakthrough in understanding how artificial neural networks, the models that underpin many modern AI systems, make their predictions.

The team found that by examining specific paths through these networks, they can uncover why a particular decision was made. Their approach, called pathwise explanation, traces how an input is carried through the network to the final output. By following individual paths, researchers can identify which input features contributed most to the outcome.
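To give a feel for what a "path" means here, the sketch below uses a deliberately tiny network with one hidden layer of ReLU units. That architecture is an assumption made for illustration; it is not the network or the algorithm the researchers used. Because a ReLU either passes its input through unchanged or outputs zero, the output for a given input can be written exactly as a sum of terms, one per input-to-hidden-to-output path, plus a bias term. The helper name `path_contributions` and the random weights are hypothetical.

```python
import numpy as np

def path_contributions(x, W1, b1, W2, b2):
    """Split the output of a one-hidden-layer ReLU network into per-path terms.

    Hypothetical helper for illustration. Returns (contrib, bias_part) where
    contrib[k, j, i] is the part of output k carried along the path
    input i -> hidden unit j -> output k, and bias_part[k] collects the biases.
    """
    pre = W1 @ x + b1                     # hidden pre-activations, shape (H,)
    gate = (pre > 0).astype(float)        # ReLU gate: 1 if the unit is active
    # Product of the weights and gate along every path, times the input value.
    contrib = (W2[:, :, None] * gate[None, :, None]
               * W1[None, :, :] * x[None, None, :])   # shape (K, H, D)
    bias_part = W2 @ (gate * b1) + b2     # bias terms do not depend on x
    return contrib, bias_part

# Toy example with random weights (purely illustrative).
rng = np.random.default_rng(0)
D, H, K = 4, 5, 3                         # inputs, hidden units, classes
x = rng.normal(size=D)
W1, b1 = rng.normal(size=(H, D)), rng.normal(size=H)
W2, b2 = rng.normal(size=(K, H)), rng.normal(size=K)

contrib, bias_part = path_contributions(x, W1, b1, W2, b2)
y = W2 @ np.maximum(W1 @ x + b1, 0) + b2  # ordinary forward pass

k = int(np.argmax(y))                     # predicted class
per_input = contrib[k].sum(axis=0)        # attribution of each input feature
j, i = np.unravel_index(np.argmax(np.abs(contrib[k])), contrib[k].shape)
print("paths + biases reproduce the output:",
      np.allclose(contrib.sum(axis=(1, 2)) + bias_part, y))
print("per-input attribution for class", k, ":", per_input)
print("strongest path: input", i, "-> hidden", j, "-> output", k)
```

Summing the path terms over the hidden units gives a per-input attribution, the kind of signal a highlighted image would display, while the single largest term points to the input and hidden unit that dominated the decision.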

The breakthrough matters because it lets people see more of the decision-making process behind AI systems. This added transparency could help developers improve these systems, for example by exposing biases and inaccuracies in their training data. It could also prove valuable in fields where AI is increasingly applied, such as medical diagnosis and financial forecasting.

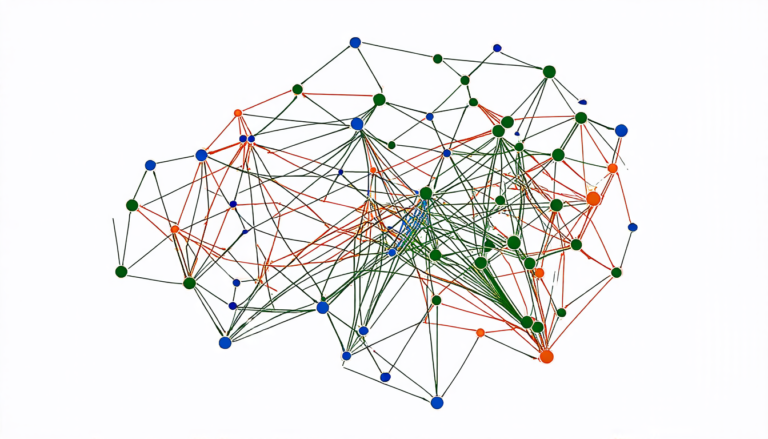

To achieve this, the researchers developed a method that analyzes how neural networks respond to different inputs. They created a piecewise linear model that can be applied to any activation function used within the network, which allowed them to examine the relationship between an input and the network's output in greater detail.
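The article does not say how the piecewise linear model is constructed. One generic way to obtain a piecewise linear stand-in for a smooth activation is linear interpolation between fixed breakpoints, sketched below for tanh. The range, the number of knots and the helper name `piecewise_linear` are assumptions for illustration, not the authors' settings. Within each segment the activation behaves like a straight line, so a network built from such pieces is locally linear and its output can be decomposed path by path, just as in a ReLU network.

```python
import numpy as np

def piecewise_linear(fn, lo=-4.0, hi=4.0, n_knots=17):
    """Approximate activation `fn` by straight lines between evenly spaced knots.

    Hypothetical helper. Returns a function mapping z to (approximation,
    segment index); inputs outside [lo, hi] extend the first or last segment.
    """
    knots = np.linspace(lo, hi, n_knots)
    values = fn(knots)
    slopes = np.diff(values) / np.diff(knots)        # one slope per segment
    intercepts = values[:-1] - slopes * knots[:-1]   # line passes through left knot

    def approx(z):
        z = np.asarray(z, dtype=float)
        # Segment i covers knots[i] <= z < knots[i + 1]; clip to valid segments.
        seg = np.clip(np.searchsorted(knots, z, side="right") - 1,
                      0, len(slopes) - 1)
        return slopes[seg] * z + intercepts[seg], seg

    return approx

tanh_pl = piecewise_linear(np.tanh)
z = np.linspace(-4.0, 4.0, 1001)
approx_vals, segments = tanh_pl(z)
print("max abs error on [-4, 4]:", np.max(np.abs(approx_vals - np.tanh(z))))
print("segment used for z = 0.3:", tanh_pl(0.3)[1])
```

Adding knots shrinks the approximation error (for linear interpolation it falls roughly with the square of the knot spacing), at the cost of more linear regions to keep track of.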

The team tested their approach on several datasets, including images from the ImageNet database and the CIFAR-10 dataset. Their results showed that pathwise explanation outperformed other explanation methods in accuracy and consistency. The method performed well even on CIFAR-10, whose small, low-resolution images (32×32 pixels) make object recognition particularly challenging.

The visualizations produced by this approach give a clear picture of how AI systems reach their decisions. In an image classification task, for instance, the method can highlight the features that contributed most to the predicted class. This kind of transparency will be important for building trust between humans and artificial intelligence.

Beyond its implications for the development of AI, the work underscores the importance of understanding how complex systems make decisions. As we rely more heavily on AI in many aspects of our lives, it is essential that we can understand the logic driving these machines. The researchers’ pathwise explanation method brings us one step closer to that goal.

Cite this article: “Unlocking the Decisions Made by Artificial Intelligence”, The Science Archive, 2025.

Artificial Intelligence, Neural Networks, Decision-Making Process, Pathwise Explanation, Transparency, Machine Learning, Image Classification, Feature Extraction, Trust, Understanding Complex Systems.